cURL in Mac OS X

cURL is an easy-to-use, cross-platform URL transfer tool, and its library, libcurl, makes it easy to download web pages so you can parse information out of them in your application.

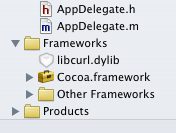

You can find pre-built binaries for just about every platform imaginable on the cURL download page. Once you have downloaded and installed libcurl, getting started is easy: add libcurl.dylib to your Xcode project to link against libcurl. You can put the global cURL setup and cleanup in the AppDelegate, as shown below.

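```objc
#import "AppDelegate.h"   // your app delegate's header (name assumed from the Xcode template)
#include <curl/curl.h>

@implementation AppDelegate

- (void)applicationDidFinishLaunching:(NSNotification *)notification {
    // Initialise libcurl once, before any other libcurl function is called.
    curl_global_init(CURL_GLOBAL_ALL);
}

- (void)applicationWillTerminate:(NSNotification *)notification {
    // Release libcurl's global resources once all transfers are finished.
    curl_global_cleanup();
}

@end
```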

Now that cURL is set up, you can download a file into memory and parse it for information. The download code is adapted from this example.

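```objc
#include <stdlib.h>
#include <string.h>
#include <curl/curl.h>

// Growing buffer that receives the downloaded bytes.
struct MemoryStruct {
    char *memory;
    size_t size;
};

// libcurl calls this once per chunk of received data.
// Returning anything other than realsize makes libcurl abort the transfer.
static size_t WriteMemoryCallback(void *contents, size_t size, size_t nmemb, void *userp) {
    size_t realsize = size * nmemb;
    struct MemoryStruct *mem = (struct MemoryStruct *)userp;

    // Grow into a temporary so the old buffer is not leaked if realloc fails.
    char *ptr = realloc(mem->memory, mem->size + realsize + 1);
    if (ptr == NULL) {
        // Out of memory: tell libcurl to stop instead of exiting the whole app.
        return 0;
    }
    mem->memory = ptr;

    memcpy(&(mem->memory[mem->size]), contents, realsize);
    mem->size += realsize;
    mem->memory[mem->size] = 0;   // keep the buffer NUL-terminated

    return realsize;
}

// Class method; place it inside one of your own @implementation blocks.
// Returns the downloaded bytes, or nil if the download failed.
+ (NSData *)downloadFileToMemory:(NSString *)url {
    struct MemoryStruct chunk;
    chunk.memory = malloc(1);  // will be grown as needed by the realloc above
    chunk.size = 0;            // no data at this point

    CURL *curl_handle = curl_easy_init();
    if (curl_handle == NULL || chunk.memory == NULL) {
        if (curl_handle != NULL)
            curl_easy_cleanup(curl_handle);
        free(chunk.memory);
        return nil;
    }

    // libcurl copies string options, so the temporary UTF-8 string is safe here.
    curl_easy_setopt(curl_handle, CURLOPT_URL, [url UTF8String]);
    curl_easy_setopt(curl_handle, CURLOPT_WRITEFUNCTION, WriteMemoryCallback);
    curl_easy_setopt(curl_handle, CURLOPT_WRITEDATA, (void *)&chunk);
    curl_easy_setopt(curl_handle, CURLOPT_USERAGENT, "libcurl-agent/1.0");

    CURLcode res = curl_easy_perform(curl_handle);
    curl_easy_cleanup(curl_handle);

    NSData *data = nil;
    if (res == CURLE_OK) {
        data = [NSData dataWithBytes:chunk.memory length:chunk.size];
    } else {
        NSLog(@"curl_easy_perform() failed: %s", curl_easy_strerror(res));
    }

    free(chunk.memory);  // free(NULL) is a no-op, so no check is needed
    return data;
}
```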
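libcurl calls WriteMemoryCallback once for every chunk of the response it receives, so a page usually arrives in several pieces. The following sketch is plain C (and therefore also valid Objective-C); it drives the same kind of callback by hand with made-up chunks, so you can watch the buffer grow and stay NUL-terminated without touching the network. The feed_chunks helper is hypothetical and only stands in for curl_easy_perform. This version of the callback returns 0 when realloc fails, which libcurl treats as a write error and uses to abort the transfer.

```c
#include <stdlib.h>
#include <string.h>

/* Same layout as MemoryStruct: a growing, NUL-terminated buffer. */
struct MemoryStruct {
    char *memory;
    size_t size;
};

/* Same contract as a CURLOPT_WRITEFUNCTION callback: return the number of
   bytes handled; returning anything else (here 0) signals an error. */
static size_t WriteMemoryCallback(void *contents, size_t size, size_t nmemb, void *userp) {
    size_t realsize = size * nmemb;
    struct MemoryStruct *mem = (struct MemoryStruct *)userp;

    /* Grow into a temporary so the old block is not leaked on failure. */
    char *ptr = realloc(mem->memory, mem->size + realsize + 1);
    if (ptr == NULL)
        return 0;

    mem->memory = ptr;
    memcpy(mem->memory + mem->size, contents, realsize);
    mem->size += realsize;
    mem->memory[mem->size] = '\0';  /* keep it usable as a C string */
    return realsize;
}

/* Hypothetical driver: hands each chunk to the callback the way
   curl_easy_perform would. Returns 0 on success, -1 if the callback
   reported an error. */
static int feed_chunks(struct MemoryStruct *mem, const char **chunks, size_t count) {
    for (size_t i = 0; i < count; i++) {
        size_t len = strlen(chunks[i]);
        if (WriteMemoryCallback((void *)chunks[i], 1, len, mem) != len)
            return -1;
    }
    return 0;
}
```

For example, feeding the chunks "<html>", "<body>" and "</body></html>" into a MemoryStruct that starts as { malloc(1), 0 } leaves memory holding "<html><body></body></html>" with size 26.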

In downloadFileToMemory:, you first create a curl_handle and set the options you need on it: the URL, the WriteMemoryCallback function, and the MemoryStruct that stores the received data. Once these options are set, call curl_easy_perform(curl_handle) to actually fetch the data and curl_easy_cleanup(curl_handle) to release the handle. Since this is a Mac OS X application, you should put the raw data into an NSData object to pass to the rest of the application. To work with the page as text, create an NSString like this:

```objc
NSString *pageSource = [[NSString alloc] initWithData:dataReceived
                                             encoding:NSISOLatin1StringEncoding];
```

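where dataReceived is the return value of the method above. Make sure the encoding matches the page's actual character set (declared in the HTTP Content-Type header or a meta charset tag), or you will get strange results: decoding a UTF-8 page as Latin-1, for example, turns every non-ASCII character into several garbage characters. Also note that initWithData:encoding: returns nil if the bytes are not valid in the chosen encoding, which can happen with NSUTF8StringEncoding, so check the result.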
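To see what those strange results look like, here is a small C sketch of the byte-for-byte mapping that NSISOLatin1StringEncoding applies: every byte becomes one character in the range U+0000 to U+00FF. The function latin1_to_utf8 is a hypothetical helper that re-encodes the result as UTF-8 so it can be printed or compared. Fed the UTF-8 bytes of "café", it produces "cafÃ©", the typical symptom of decoding a UTF-8 page as Latin-1. If the page declares UTF-8, NSUTF8StringEncoding is the encoding to pass instead.

```c
#include <stddef.h>

/* Treat each input byte as a Latin-1 character (U+0000..U+00FF) and write
   it out as UTF-8. This mirrors what reading a UTF-8 page with
   NSISOLatin1StringEncoding does to its bytes.
   `out` must have room for 2 * len + 1 bytes. Returns the output length. */
static size_t latin1_to_utf8(const unsigned char *in, size_t len, char *out) {
    size_t o = 0;
    for (size_t i = 0; i < len; i++) {
        unsigned char b = in[i];
        if (b < 0x80) {
            out[o++] = (char)b;                    /* ASCII is unchanged */
        } else {
            out[o++] = (char)(0xC0 | (b >> 6));    /* two-byte UTF-8 lead byte */
            out[o++] = (char)(0x80 | (b & 0x3F));  /* continuation byte */
        }
    }
    out[o] = '\0';
    return o;
}
```

The UTF-8 encoding of "é" is the two bytes 0xC3 0xA9; read as Latin-1 they become the two characters "Ã" and "©", so the five input bytes of "café" turn into seven output bytes.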

Now that you have the page source, you can parse it for information. The following method finds the link to the next page on a Blogger-formatted blog.

```objc
// Search for next page link
// Returns the string with the next page link, or nil if it doesn't exist
+ (NSString *)parseForNextPage:(NSString *)pageSource {
    NSScanner *scanner = [NSScanner scannerWithString:pageSource];

    // Skip ahead to the pager element that wraps the link.
    [scanner scanUpToString:@"<span id='blog-pager-older-link'>" intoString:NULL];
    if (![scanner isAtEnd]) {
        [scanner scanString:@"<span id='blog-pager-older-link'>" intoString:NULL];

        // The link target is the first href='...' after the marker.
        [scanner scanUpToString:@"href='" intoString:NULL];
        if (![scanner isAtEnd]) {
            [scanner scanString:@"href='" intoString:NULL];
            NSString *link = nil;
            [scanner scanUpToString:@"' id" intoString:&link];

            // HTML attributes escape & as &amp;, so undo that for a usable URL.
            link = [link stringByReplacingOccurrencesOfString:@"&amp;" withString:@"&"];
            return link;
        }
    }
    return nil;
}
```

An NSScanner lets you scan through the page source for a static string that always appears just before the next page link. If you find it, you can scan forward to the link itself and return it. This surely isn't the most elegant solution, and it will break if Blogger changes its markup, but it's quick and easy, and sometimes that is all you need.
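The same scan-for-a-marker idea works in plain C, with strstr in place of NSScanner. The sketch below is a hypothetical counterpart of parseForNextPage (the name find_next_page_link is made up): it looks for the same pager marker, then the next href=', copies the value up to "' id", and decodes &amp; back to &. Unlike the NSScanner version, it returns NULL when the closing "' id" is missing instead of returning the rest of the page. The caller must free the returned string.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical C counterpart of parseForNextPage.
   Returns a malloc'd copy of the link, or NULL if any marker is missing. */
static char *find_next_page_link(const char *html) {
    const char *marker = "<span id='blog-pager-older-link'>";
    const char *p = strstr(html, marker);
    if (p == NULL)
        return NULL;

    /* The link target starts right after the first href=' past the marker. */
    p = strstr(p + strlen(marker), "href='");
    if (p == NULL)
        return NULL;
    p += strlen("href='");

    const char *end = strstr(p, "' id");
    if (end == NULL)
        return NULL;

    char *link = malloc((size_t)(end - p) + 1);
    if (link == NULL)
        return NULL;

    /* Copy the attribute value, turning each "&amp;" back into "&". */
    size_t n = 0;
    while (p < end) {
        if ((size_t)(end - p) >= 5 && strncmp(p, "&amp;", 5) == 0) {
            link[n++] = '&';
            p += 5;
        } else {
            link[n++] = *p++;
        }
    }
    link[n] = '\0';
    return link;
}
```

Called on a made-up snippet such as `<span id='blog-pager-older-link'><a href='https://example.blogspot.com/?page=2&amp;max=7' id='older'>Older</a></span>`, it returns `https://example.blogspot.com/?page=2&max=7`.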

As you can see, libcurl is straightforward and easy to get started with. It's a great addition to your toolkit for automating all kinds of web-based activities.

For more information, see the documentation on the cURL website. Especially helpful are the numerous examples.